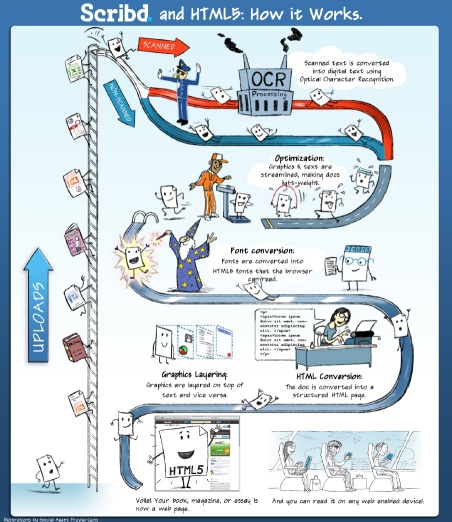

This post was written by John Engelhart, an iOS developer at Scribd and author of the JSONKit library.

So you have a lot of PNG images in your iPhone app…

When I started here at Scribd, we were just a few weeks away from launching our first iPhone app– Float.

Being a new hire obviously meant that I didn’t know the code base. Being just a few weeks away from launch obviously meant that there was a strong focus on getting something out the door. So the first thing I did was start making huge, sweeping fundamental architecture changes like swapping out all the XML REST stuff with JSON, and switching the JSON parser that was currently being used with JSONKit, because JSONKit is really, really fast. Just look at those graphs! Does it happen to parse JSON correctly? Are numbers arbitrarily and silently truncated to 32 or 64 bits haphazardly? Are floating point values preserved correctly when round tripped? Who cares! That simple graph tells me everything I need about those complicated technical issues: it’s fast! Anyone who suggested this had anything to do with the fact that I was the author of JSONKit was quickly silenced…

Oh, no, wait… that’s right, that’s not the way it happened… In reality it was obvious that no matter how much I might like to contribute to getting the app out the door, odds were that I would either slow things down or screw something important up because of my unfamiliarity with the code base. One thing that caught my eye was that the application had a lot of PNG image assets, and in my various adventures in the great city of life, I knew that you could often easily make PNG images even smaller.

This seemed like a good project that I could work on:

- It was independent of what everyone else was doing, so no one would have to stop and explain how something in the app worked.

- It was something that would probably either work or it wouldn’t. It would also be pretty unambiguous about whether or not it was causing problems.

- It could be easily and trivially backed out if a problem was found, even up until the very last second… as long as you kept the original PNG images, which seemed pretty obvious.

- I could actually contribute to the app that was going to ship in a few weeks, even if it only meant that I “saved a few bytes that the end user has to download and takes up on their iPhone”.

Small details

In astronomy, you first enjoy three or four years of confusing classes, impossible problem sets, and sneers from the faculty. Having endured that, you’re rewarded with an eight-hour written exam, with questions like: “How do you age-date meteorites using the elements Samarium and Neodymium?” If you survive, you win the great honor and pleasure of an oral exam by a panel of learned professors.

I remember it vividly. Across a table, five profs. I’m frightened, trying to look casual as sweat drips down my face. But I’m keeping afloat; I’ve managed to babble superficially, giving the illusion that I know something. Just a few more questions, I think, and they’ll set me free. Then the examiner over at the end of the table—the guy with the twisted little smile—starts sharpening his pencil with a penknife.

“I’ve got just one question, Cliff,” he says, carving his way through the Eberhard-Faber. “Why is the sky blue?”

My mind is absolutely, profoundly blank. I have no idea. I look out the window at the sky with the primitive, uncomprehending wonder of a Neanderthal contemplating fire. I force myself to say something—anything. “Scattered light,” I reply. “Uh, yeah, scattered sunlight.”

“Could you be more specific?”

Well, words came from somewhere, out of some deep instinct of self-preservation. I babbled about the spectrum of sunlight, the upper atmosphere, and how light interacts with molecules of air.

“Could you be more specific?”

I’m describing how air molecules have dipole moments, the wave-particle duality of light, scribbling equations on the blackboard, and…

“Could you be more specific?”

An hour later, I’m sweating hard. His simple question—a five-year-old’s question—has drawn together oscillator theory, electricity and magnetism, thermodynamics, even quantum mechanics. Even in my miserable writhing, I admired the guy.

While “saving a few bytes” might seem trivial, small details like that matter to me. Whether or not someone is willing to pay attention to the small details can say a lot about them. The above quote from Clifford Stoll’s The Cuckoo’s Egg: Tracking a Spy Through the Maze of Computer Espionage is sort of like the culmination of a lot of small details– the sky is blue for a reason, often for seemingly trivial, small details… but those small details form a long, causally related chain. I think it also eloquently illustrates that while small details matter, knowing which small details matter is just as important, and the causal relationship between them. Just knowing that “Why is the sky blue?” is an interesting question can reveal just as much about someone.

There are a lot of small, trivial details involved in something as simple as “optimizing an iOS device’s PNG images”. For example, once Xcode.app has built the app, you cannot modify any of the files in the application’s bundle because that will invalidate its code signing. There’s also the small detail that the PNG images that end up in your application’s bundle don’t conform to the PNG standard, but actually use an Apple proprietary PNG extension.

Turning iPhone PNG optimization up to eleven

Xcode.app has a build setting that you may not be aware of– Compress PNG Files, and for new Xcode.app iPhone projects it is set to Yes by default.

For the vast majority of projects the only time it is ever set is when the project was initially created… which is probably one of the reasons why you’ve never heard of it. If you did happen to notice the Compress PNG Files build setting, the only other option is No. Given these two choices, who wouldn’t want their PNG files compressed? Yes, please!

What it does

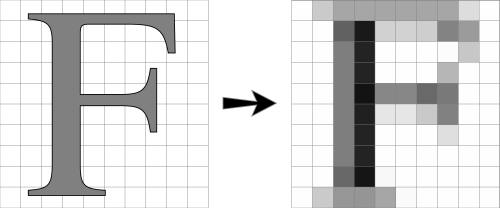

When you build your project, and the target is an iOS device, not the simulator, the Compress PNG Files build setting causes any PNG resources that are copied into your application’s bundle to go through a preprocessing step that optimizes them for iOS devices.

Apple has not published any of the details as to what it specifically means to “optimize a PNG image for iOS devices”, but others have reverse engineered at least some of it:

- Extra critical chunk (CgBI).

- Byteswapped (RGBA –> BGRA) pixel data, presumably for high-speed direct blitting to the framebuffer.

- zlib header, footer, and CRC removed from the IDAT chunk.

- Premultiplied alpha (color′ = color * alpha / 255).
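As a rough illustration of the last two pixel-level items, the following Python sketch premultiplies one 8-bit RGBA pixel and reorders it to BGRA. The function name is hypothetical, and the use of truncating integer division is an assumption; the exact rounding Apple’s tool applies is not documented.

```python
def to_bgra_premultiplied(r, g, b, a):
    """Premultiply an 8-bit RGBA pixel by its alpha and reorder it to BGRA."""
    def premultiply(channel):
        # color' = color * alpha / 255; truncating division is an assumption.
        return channel * a // 255

    return (premultiply(b), premultiply(g), premultiply(r), a)


# A fully opaque pixel only has its channel order changed.
print(to_bgra_premultiplied(255, 128, 0, 255))
# A partially transparent pixel also has its color channels scaled down.
print(to_bgra_premultiplied(255, 128, 0, 128))
```

Because the color channels are scaled by alpha, the transformation loses information for partially transparent pixels, which is one reason the original PNG files are worth keeping.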

Like gzip -9, except this one goes to gzip -11

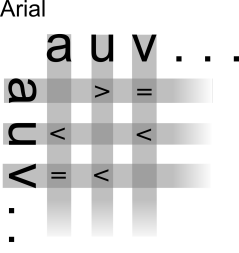

Most PNG optimization tools tend to perform optimizations at the PNG level, such as:

- Color reduction (e.g., 24-bit RGB to 256 indexed color conversion, etc).

- Bit depth reduction (e.g., 8-bits per Red, Green, and Blue to 4-bits per).

- Optimizing some of the zlib library’s user tunable settings.

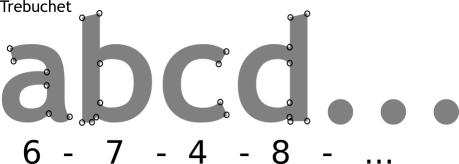

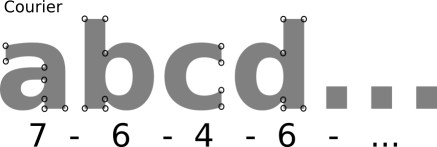

- PNG filter optimization.

The PNG standard specifies a number of predefined filters that can be applied to an image and that can often improve compression. It’s difficult to tell in advance which filter will give the best results for a particular image, so PNG optimizers usually try several of them. As you can probably imagine, the number of combinations of different options grows rather quickly, so there is usually an option to specify how many of them will be tried in an effort to optimize the PNG image’s size. As is often the case with such brute force techniques, the amount of time it takes to try the different combinations tends to grow exponentially, while the improvements gained for the extra effort shrink just as quickly– the dreaded diminishing returns, where more and more work gets you less and less of an improvement.
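To make the effect of a filter concrete, here is a small Python sketch that compresses the same synthetic grayscale image twice: once with filter type 0 (None) and once with filter type 1 (Sub), both of which are defined by the PNG standard. The test image and helper names are made up for illustration, and real images will behave less dramatically than this ramp.

```python
import zlib

WIDTH, HEIGHT = 256, 64


def make_rows():
    """Grayscale test image: a horizontal ramp whose start shifts on each row."""
    return [bytes((x + 3 * y) % 256 for x in range(WIDTH)) for y in range(HEIGHT)]


def filter_none(row):
    # Filter type 0: the row is stored as-is after the filter-type byte.
    return b"\x00" + row


def filter_sub(row):
    # Filter type 1: each byte becomes its difference from the byte to its
    # left, modulo 256. At 1 byte per pixel, "left" is simply row[i - 1].
    return b"\x01" + bytes(
        (row[i] - (row[i - 1] if i else 0)) % 256 for i in range(len(row))
    )


def compressed_size(rows, row_filter):
    """Size of the zlib stream an IDAT chunk would hold for these rows."""
    raw = b"".join(row_filter(row) for row in rows)
    return len(zlib.compress(raw, 9))


rows = make_rows()
size_none = compressed_size(rows, filter_none)
size_sub = compressed_size(rows, filter_sub)
print("None:", size_none, "bytes; Sub:", size_sub, "bytes")
```

On this ramp the Sub filter turns almost every byte into the same small difference, so the compressor has far less to encode. An optimizer that tries several filters is repeating this comparison across many more options.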

One PNG optimization tool stands apart from the rest, however: the advpng optimizer from the AdvanceCOMP recompression utilities. This PNG optimizer does most of its optimization at the zlib level– instead of using the standard zlib library, it uses the implementation of RFC 1950 (the standard that defines the zlib compression format) from the 7-Zip / LZMA compression engine. Most of the time, the 7-Zip / LZMA RFC 1950 / zlib compression engine is able to do a better job, and thus produce a smaller compressed result, than the standard zlib library at its maximum compression setting.

However, the advpng tool does not perform any of the optimization strategies that the common PNG optimizers use, and in fact will undo any of the optimizations that they performed when it recompresses the result using the 7-Zip / LZMA compression engine. And you can forget about using it on quirky, proprietary PNG image formats that aren’t PNG standards compliant…

What would be great is…

The majority of a PNG image is contained in the IDAT chunk– it contains the actual pixels that make up the image. The IDAT chunk is compressed using standard RFC 1950 / zlib compression. What’s really needed is a tool that just recompresses the IDAT chunk using the 7-Zip / LZMA compression engine, while leaving everything else unmodified.
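For readers who have not looked inside a PNG file, the sketch below walks the chunk structure of a standard PNG and prints each chunk’s type and size. It builds a tiny grayscale image in memory so it needs no files; the helper names are illustrative, and it handles only standard PNG files, not Apple’s modified format.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type, data):
    """Serialize one chunk: length, type, data, then CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def make_test_png(width=64, height=64):
    """Build a small 8-bit grayscale PNG in memory."""
    # IHDR: width, height, bit depth 8, color type 0, compression 0,
    # filter method 0, no interlacing.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    # Every row starts with filter type 0 (None).
    raw = b"".join(
        b"\x00" + bytes((x * y) % 256 for x in range(width)) for y in range(height)
    )
    return (
        PNG_SIGNATURE
        + make_chunk(b"IHDR", ihdr)
        + make_chunk(b"IDAT", zlib.compress(raw))
        + make_chunk(b"IEND", b"")
    )


def iter_chunks(png):
    """Yield (type, data) for every chunk in a PNG byte string."""
    if png[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    pos = 8
    while pos < len(png):
        length, chunk_type = struct.unpack(">I4s", png[pos:pos + 8])
        yield chunk_type, png[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


png = make_test_png()
for chunk_type, data in iter_chunks(png):
    print(chunk_type.decode("ascii"), len(data), "bytes")
```

Even in this tiny file, IDAT dwarfs the 13-byte IHDR and the empty IEND, which is why recompressing only that chunk is worthwhile.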

Well, Good News, Everyone! Just such a tool exists: the advpngidat tool, which is part of Scribd’s AdvanceCOMP fork on github.com. Not only that, it happens to work correctly with Apple’s non-standard PNG format! This means you can make the PNG images in your iOS application’s bundle even smaller. Naturally, your mileage may vary, and it won’t be able to make every PNG smaller, but it can usually compress your iOS PNG images an additional 5% – 7%.

Turning Xcode.app up to eleven

So how do you turn your iOS project’s PNG compression up to eleven using Xcode.app? You use Scribd’s Xcode.app PNG optimizer enhancement, also available on github.com.

Important: Scribd’s Xcode.app PNG optimizer enhancement directly modifies configuration files that are private to Xcode.app!

While the Xcode.app PNG optimizer enhancement modifies private Xcode.app files, the changes it makes are relatively benign:

- It modifies some .xcspec files that are used to enable the Compress PNG Files build setting in the GUI by changing the build setting from a boolean to a multiple choice.

- It modifies some related files to modify and add descriptions that are displayed in help and info displays.

- It modifies some perl and shell scripts that perform the actual copy and “optimize the PNG image for iOS devices” so that, depending on the additional build setting options, they pass the optimized PNG image to advpngidat for additional compression.

The end result is this: the Compress PNG Files setting, which was a simple Yes / No boolean, turns into a multiple choice build setting:

| Setting | Description |
| --- | --- |
| None | Identical to the unmodified Compress PNG Files No setting. |
| Low | Identical to the unmodified Compress PNG Files Yes setting. This uses the Apple proprietary version of pngcrush to optimize PNG files for iOS devices. |
| Medium | The compressed PNG files from the Low setting are further optimized by the advpngidat command. |
| High | The same as Medium, except a handful of carefully chosen -m compression methods that work much better in practice are used instead of the default heuristic used by pngcrush. |
| Extreme | The same as Medium, except pngcrush is passed the -brute option which tries all of the compression method permutations. Warning: This can take a very long time! |

It even goes to twelve, but your puny iOS device can’t handle it…

Unfortunately, you should not use the High and Extreme settings. While iOS versions < 5.0 had no problems with PNG images compressed with either setting, iOS 5.0 will not correctly display PNG images compressed at either High or Extreme. Although it depends on the particulars of the image, some images will be displayed using the wrong colors. Of course, there could be other problems as well, as the image format is an unpublished, non-standard PNG extension.

That being said, the Medium compression setting seems to work just fine– the only optimization it does is recompress the IDAT chunk using a better RFC 1950 / zlib compression engine. Everything else in the PNG file is passed through unmodified.

Help fight random entropy!

Take a look at Scribd’s AdvanceCOMP fork and Xcode.app PNG optimizer enhancement (which requires the advpngidat tool from the AdvanceCOMP fork), both available on github.com. After reading the documentation, and assuming you’re comfortable with modifying some of Xcode.app’s private files, install them both.

Once installed, simply set your Xcode.app iOS project’s Compress PNG Files build setting to Medium, and do your part in the fight against random entropy!

Just how many useless bytes were saved?

| Setting | Size (bytes) | Δ Low | Δ Extreme |
| --- | --- | --- | --- |
| Low | 9740448 | 100.0% | 131.3% |
| Medium | 8969108 | 92.1% | 120.9% |
| High | 7756942 | 79.6% | 104.6% |
| Extreme | 7418479 | 76.2% | 100.0% |

As previously mentioned, a problem was discovered with iOS 5.0 with some images compressed using either High or Extreme. This is most likely due to the fact that the Apple proprietary “optimized for iOS devices” format seems to only use a PNG filter setting of None. This means that the decompressed result can be used without any additional per-pixel filter processing.

So, in the end, we were only able to use the Medium setting, which only optimizes a PNG image’s IDAT chunk, leaving the rest of the bytes completely unmodified. Still, this resulted in a savings of 7.9%, which translates into roughly 753K-bytes shaved off the final application bundle.
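The savings figures can be checked directly from the Low and Medium sizes reported in the table. This short Python snippet does the arithmetic, assuming “K-bytes” means units of 1024 bytes.

```python
# Bundle PNG sizes in bytes, as reported for the Low and Medium settings.
low_size = 9740448
medium_size = 8969108

saved_bytes = low_size - medium_size
saved_percent = round(100 * saved_bytes / low_size, 1)
saved_kib = round(saved_bytes / 1024, 1)  # assumes 1 K-byte = 1024 bytes

print(saved_bytes, "bytes saved")
print(saved_percent, "% smaller than Low")
print(saved_kib, "K-bytes")
```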

One more thing…

The advpngidat compression tool isn’t just for “optimized for iOS devices” PNG images; it can be used on regular PNG images too. This can be a useful addition to any workflow that passes PNG images through one of the common PNG optimization tools (e.g., optipng and pngcrush). As an example, any web site that has a large number of static PNG images can use a simple shell script to process all of the static PNG images with something like optipng, and then process the optipng results with advpngidat.
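A two-stage pass like that could be organized along the following lines. This Python sketch only builds and prints the commands rather than running them. The post names the tools but not their arguments, so the stage definitions, file paths, and the bare `advpngidat` invocation are placeholders to be replaced after checking each tool’s documentation.

```python
def build_commands(png_paths, stages):
    """Return one argv list per (file, stage), in the order they should run.

    `stages` is a list of argv prefixes; the PNG path is appended to each.
    """
    commands = []
    for path in png_paths:
        for stage in stages:
            commands.append([*stage, str(path)])
    return commands


# Assumed stage definitions, not taken from the post.
STAGES = [
    ["optipng"],     # PNG-level optimizer, run with its default settings
    ["advpngidat"],  # placeholder: the real tool may require extra options
]

# Hypothetical file names, used only to show the resulting command order.
for argv in build_commands(["static/logo.png", "static/background.png"], STAGES):
    print(" ".join(argv))
    # To execute for real: subprocess.run(argv, check=True)
```

Running the stages file by file keeps the order explicit: each image goes through the PNG-level optimizer first, and its result is then handed to the IDAT recompressor.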

In fact, the advpngidat tool effectively does what is on the roadmap for the optipng tool:

… which is exactly what advpngidat does today– the only “optimization” it performs is to recompress the IDAT chunk using the “powerful 7zip deflation” compressor. If the recompressed result happens to be bigger than the original, then the PNG image is left unmodified. Otherwise, the PNG image is replaced with the smaller, optimized result.
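The “recompress the IDAT chunk, keep the result only if it is smaller” logic can be sketched in Python as follows. This is not how advpngidat is implemented: the standard library’s zlib at level 9 stands in for the 7-Zip deflate engine, the helper names are made up, and the code handles only standard PNG files, not Apple’s modified format.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type, data):
    """Serialize one chunk: length, type, data, then CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def read_chunks(png):
    """Return a list of (type, data) pairs for every chunk in the file."""
    if png[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    chunks, pos = [], 8
    while pos < len(png):
        length, chunk_type = struct.unpack(">I4s", png[pos:pos + 8])
        chunks.append((chunk_type, png[pos + 8:pos + 8 + length]))
        pos += 12 + length
    return chunks


def recompress_idat(png):
    """Recompress the IDAT stream; keep the original unless the result is smaller."""
    chunks = read_chunks(png)
    # A PNG may split its zlib stream over several consecutive IDAT chunks.
    stream = b"".join(data for chunk_type, data in chunks if chunk_type == b"IDAT")
    packed = zlib.compress(zlib.decompress(stream), 9)  # stand-in compressor
    out, idat_written = [PNG_SIGNATURE], False
    for chunk_type, data in chunks:
        if chunk_type != b"IDAT":
            out.append(make_chunk(chunk_type, data))  # all other chunks pass through
        elif not idat_written:
            out.append(make_chunk(b"IDAT", packed))
            idat_written = True
    candidate = b"".join(out)
    return candidate if len(candidate) < len(png) else png


# Demo: a 64x64 grayscale image whose IDAT was deliberately stored at level 0.
ihdr = struct.pack(">IIBBBBB", 64, 64, 8, 0, 0, 0, 0)
raw = b"".join(b"\x00" + bytes(64) for _ in range(64))
original = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", ihdr)
    + make_chunk(b"IDAT", zlib.compress(raw, 0))
    + make_chunk(b"IEND", b"")
)
smaller = recompress_idat(original)
print(len(original), "->", len(smaller), "bytes")
```

Because only the compressed stream changes, the decoded pixels are byte-for-byte identical, and running the function a second time simply returns its input.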

This is really something that every web site with static PNG images should do. You only need to perform the “optimization” on an image once, and every request for that PNG image after that point will use the smaller, optimized result. You don’t have to be a rocket scientist to figure out the benefits: fewer bytes to send means pages load that much faster, and if you happen to pay for the amount of bandwidth you use… it means a simple, one-time run through advpngidat can save you real money.